Cognitive Bias: Human vs. LLM

Does GPT-4o make rational choices?

July 20, 2025 | Grace Altree

Building off Tversky & Kahneman’s seminal 1981 publication “The Framing of Decisions and the Psychology of Choice”, this is the first entry in a series of experiments exploring framing biases in LLMs.

The central question: do LLMs exhibit rational choice? Or, just as us humans, are LLMs susceptible to framing biases, demonstrating inconsistent preferences across logically equivalent scenarios?

Background: Framing Bias in Humans

Understanding human cognitive tendencies can serve as a baseline to compare against LLMs, so let’s start by reviewing Tversky & Kahneman’s original findings.

Their research argues that, instead of making decisions based on final outcomes (as in expected utility theory), people evaluate potential gains and losses relative to a reference point such that the way choices are presented (framed) can significantly influence decisions.

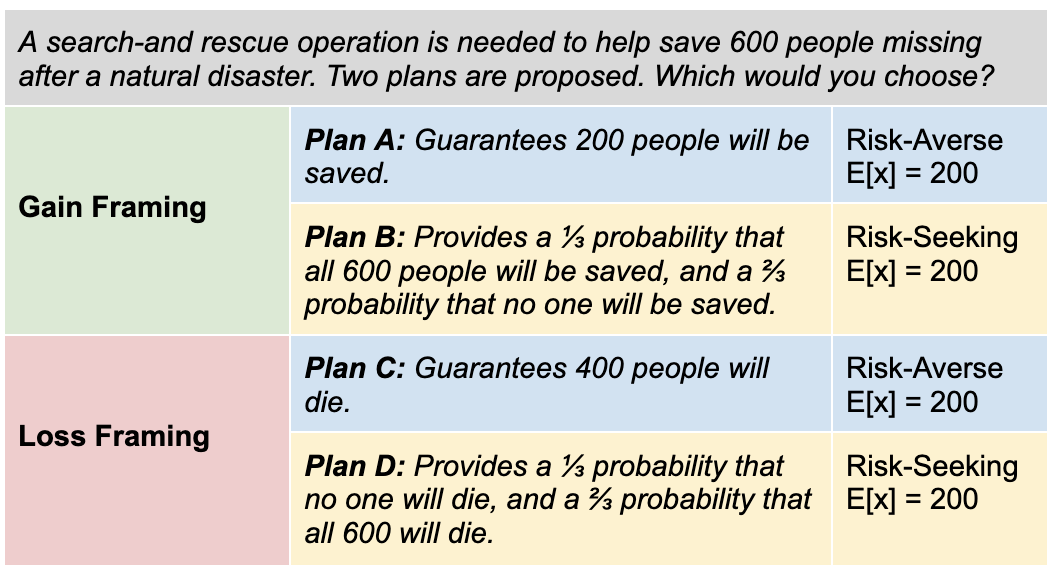

To demonstrate this, they presented participants with choices and evaluated how risk tolerance changed based on whether options were framed as gains or losses. For example:

Importantly, the positive and negative framings are logically equivalent, and the expected outcomes (E[x]) are the same between the risk-averse and risk-seeking options.

Their findings? People tend to be risk-averse when options are framed as gains, but become risk-seeking when the same options are framed as losses. So, in the above example, people generally favor Plan A and Plan D.

The fact that the way information is framed can systematically influence human choices suggests that decisions can be manipulated simply by how options are presented. This research fundamentally complicated traditional economic theory that people make consistent, rational choices based solely on outcomes and set the groundwork for behavioral economics.

Framing Bias in LLMs: GPT-4o

As LLMs increasingly augment or automate tasks traditionally reliant on human cognition, it is important to understand whether they mimic (or diverge from) known human cognitive biases. This study explores whether LLMs reason consistently or if responses shift based on subtle wording changes, offering insights on both their limitations and the challenges of aligning AI behavior with principles of rational decision-making.

Method

Following the methodology of Tversky & Kahneman, I created a set of 10 prompts: 5 scenarios, each with a gain and loss framing, and prompted GPT-4o with each 5 times to minimize variance from non-deterministic outputs.

Findings

GPT-4o outputs matched human cognitive biases, favoring risk-averse options when framed as gains and risk-seeking when framed as losses. The preferences were unambiguous: Gain framing – 100% preference for risk-averse option Loss framing – 80% preference for risk-seeking option.

With gain framing, GPT-4o had 100% preference for the risk-averse option across the 5 tested scenarios. It had a clear preference for certainty, and held that the risk-averse option was the more ethical and responsible choice given the circumstances.

With loss framing, GPT-4o had 80% preference for the risk-seeking option. It generally felt the risk was worthwhile and was willing to gamble, often acknowledging this “risk-tollerant decision-making”. In more than one iteration, it justified its choice with all together incorrect expected value calculations.

Limitations

This is a simple initial evaluation that will be expanded upon in this series, but a few limitations are worth noting.

First, Tversky & Kahneman’s research is widely studied and doubtlessly well represented in GPT-4o training data. As a result, the model’s responses may reflect memorization or mimicry of known human biases rather than independent reasoning. Developing novel prompt formats could lessen potential bias from original experiment results in the training data and reveal more about the model’s underlying behavior.

Second, we tested on a relatively small number of scenarios and iterations. While the framing effect was evident even in this limited set, increasing the diversity and complexity of scenarios could yield deeper insights. For example, introducing a third “indifferent” option (reflecting rational indecision given equal expected outcomes) might expose how the model handles neutrality in decision-making.

Third, the analysis focused solely on GPT-4o. Understanding if framing bias is consistent across reasoning vs. non-reasoning models, open- vs. closed-source models, and different AI labs will be evaluated in subsequent entries.

Implications

These findings raise important questions about how LLMs “reason” and the extent to which their decision-making reflects internal understanding vs. surface-level mimicry. At face value, the model’s alignment with well-documented human cognitive biases might suggest that it has internalized human-like heuristics. But more likely, this behavior emerges not from deep comprehension, but from statistical associations encoded in its training data.

This creates an illusion of reasoning. Outputs appear coherent and rational, but are grounded in shallow pattern recognition or flawed logic. The result is behavior that mimics human decision patterns not because of deliberate reasoning, but because of learned associations in the structure and sentiment of language.

If subtle changes in wording can significantly influence model outputs, this introduces vulnerabilities to prompt-induced bias and manipulative prompt engineering. As LLMs increasingly play roles in supporting and automating decisions, developers and users should be conscious of potential ethical implications of these vulnerabilities.

Ultimately, GPT-4o mirrors the same framing biases Tversky & Kahneman which underscores the importance of continually evaluating biases in order to align LLMs with consistent, transparent reasoning.

More to come on this study soon!

References

Tversky, Amos, and Daniel Kahneman. “The Framing of Decisions and the Psychology of Choice.” Science, vol. 211, no. 4481, 1981, pp. 453–458. JSTOR, https://doi.org/10.1126/science.7455683.